Training Data for Generative AI

Get the training data your generative AI models need – high-quality, human-validated, and secure across all modalities.

LXT delivers the multimodal training data and expert evaluation services you need to scale responsible generative AI systems. From text-only LLMs to multimodal, voice-enabled agents, we support every architecture with tailored data, secure workflows, and human-in-the-loop validation.

Our training data for Generative AI by modality

Text

- Domain-specific corpus creation and collection
- Prompt-response generation (standard and adversarial)
- RLHF (Reinforcement Learning from Human Feedback)
- Semantic chunking, classification, and redaction
- Toxic language detection and ethical annotation

Audio

- Speech and non-speech audio collection across 1,000+ locales
- Emotional and prosodic labeling for voice AI
- TTS model tuning and validation
- Speaker labeling, diarization, transcription, and QA
- Audio redaction and sensitive content filtering

Image

- Image data sourcing for diverse use cases
- Caption generation, correction, and enrichment
- Object, scene, and emotion annotation
- Bias detection in visual outputs
- Multimodal alignment with text prompts

Video

- Collection and curation of scenario-driven video datasets
- Video captioning and metadata enrichment
- Action, sentiment, and interaction tagging
- Safety checks and quality validation for GenAI outputs
- Language-aligned video QA (subtitles, spoken content)

LLMs

- Data pipelines for fine-tuning and evaluating large language models
- Instruction-tuned prompt creation
- Red teaming for hallucination and vulnerability detection
- Evaluation tasks with diverse human judgments
- Ethical scoring and safety testing across domains

Why GenAI leaders work with LXT

Human Intelligence Built-In

Expert-in-the-loop services from prompt tuning to RLHF and ethical evaluation.

Multimodal & Multilingual

Speech, text, image, video – across 1,000+ language locales and cultural contexts.

Precision Across Domains

From legal to finance to retail: customized training data for generative AI aligned to your industry and product.

Robust Model Evaluation

Services for RLHF, red teaming, hallucination testing, safety and bias detection.

Built to Scale

8M+ global crowd, 250K+ domain experts, ISO-certified facilities on 3 continents.

Guaranteed Accuracy

100% quality guarantee. Multi-layer QA, expert review, analytics & gold tasks.

Where LXT fits in your GenAI stack

Generative AI models vary widely in structure, purpose, and data requirements. Whether you're working on a text-only LLM or a multimodal system, each architecture demands a different mix of curated training data, human feedback, and evaluation workflows. The following overview shows common GenAI model types, their training data requirements, and the LXT services that support them.

LLMs & SLMs

Chatbots, assistants, summarization, search

What You Need:

Domain-specific text data, tuned prompts, RLHF and red teaming

LXT Delivers:

Voice AI / TTS

Conversational agents, emotional speech, IVR

What You Need:

Natural-sounding speech, emotion/prosody labels, TTS model validation

LXT Delivers:

Multimodal & VLMs

Text-to-image, video captioning, visual agents

What You Need:

Aligned image-text pairs, metadata, visual QA

LXT Delivers:

RAG-Based Models

Document Q&A, enterprise search, legal/finance bots

What You Need:

Chunked documents, metadata tagging, retrieval optimization

LXT Delivers:

Ethical & Safety GenAI

Bias mitigation, hallucination control, red teaming

What You Need:

Adversarial prompts, diverse judgment, toxicity and PII checks

LXT Delivers:

Personalization Models

Marketing copy, UX text, dynamic content generation

What You Need:

User-segmented data, tone/style control, contextual evaluation

LXT Delivers:

How we deliver training data for Generative AI

From scoped pilot to scalable delivery – designed for enterprise-grade GenAI.

Step-by-Step Process

1. Define scope & success metrics

We work with your team to clarify use case, volume, modalities, and evaluation criteria.

2. Pilot with gold tasks

We launch a small-scale project to calibrate guidelines, quality thresholds, and review flows.

3. Guideline refinement & training

Contributor onboarding, test runs, and domain expert calibration ensure consistency.

4. Scaled production with QA layers

Your generative AI training data is delivered at scale with built-in multi-pass QA, spot checks, and analytics.

5. Human-in-the-loop evaluation

RLHF, output scoring, bias reviews, and red teaming are performed by trained contributors or domain experts.

6. Secure delivery & feedback loop

Final data and insights are transferred via secure channels; feedback informs continuous improvement.

Quality assurance in Generative AI training data projects

- Multi-pass review workflows: Every data item goes through trained annotators, reviewers, and spot checks.
- Gold tasks and benchmarking: Used in pilots and live production to track quality, drift, and annotator performance.
- Expert calibration: Domain experts validate labeling guidelines and contribute directly to specialized tasks.
- Data analytics dashboards: Real-time accuracy, throughput, and performance metrics are available upon request.
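As an illustration of how gold tasks can track annotator performance, the following is a minimal sketch and not a description of LXT's internal tooling. It assumes that items with known answers are mixed into the regular work queue, that each submission is an `(annotator, task_id, label)` tuple, and that a review threshold of 0.8 is acceptable; the names `GOLD`, `gold_accuracy`, and `flag_for_review` are hypothetical.

```python
from collections import defaultdict

# Hypothetical gold set: task_id -> expected label (assumed for illustration).
GOLD = {"t1": "toxic", "t2": "safe", "t3": "safe"}


def gold_accuracy(submissions, gold):
    """Return {annotator: accuracy}, scored only on submissions that hit gold tasks."""
    hits = defaultdict(int)
    seen = defaultdict(int)
    for annotator, task_id, label in submissions:
        if task_id not in gold:
            continue  # regular production item, not scored against gold
        seen[annotator] += 1
        hits[annotator] += int(label == gold[task_id])
    return {a: hits[a] / seen[a] for a in seen}


def flag_for_review(accuracy, threshold=0.8):
    """List annotators whose gold accuracy falls below the (assumed) threshold."""
    return sorted(a for a, acc in accuracy.items() if acc < threshold)


submissions = [
    ("ann_a", "t1", "toxic"),
    ("ann_a", "t2", "safe"),
    ("ann_a", "t9", "safe"),  # not a gold task, ignored by the scorer
    ("ann_b", "t1", "safe"),
    ("ann_b", "t3", "safe"),
]

accuracy = gold_accuracy(submissions, GOLD)
print(accuracy)                   # {'ann_a': 1.0, 'ann_b': 0.5}
print(flag_for_review(accuracy))  # ['ann_b']
```

Recomputing the same score per time window would give a simple drift signal; the right threshold and window size depend on the task and are left open here.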

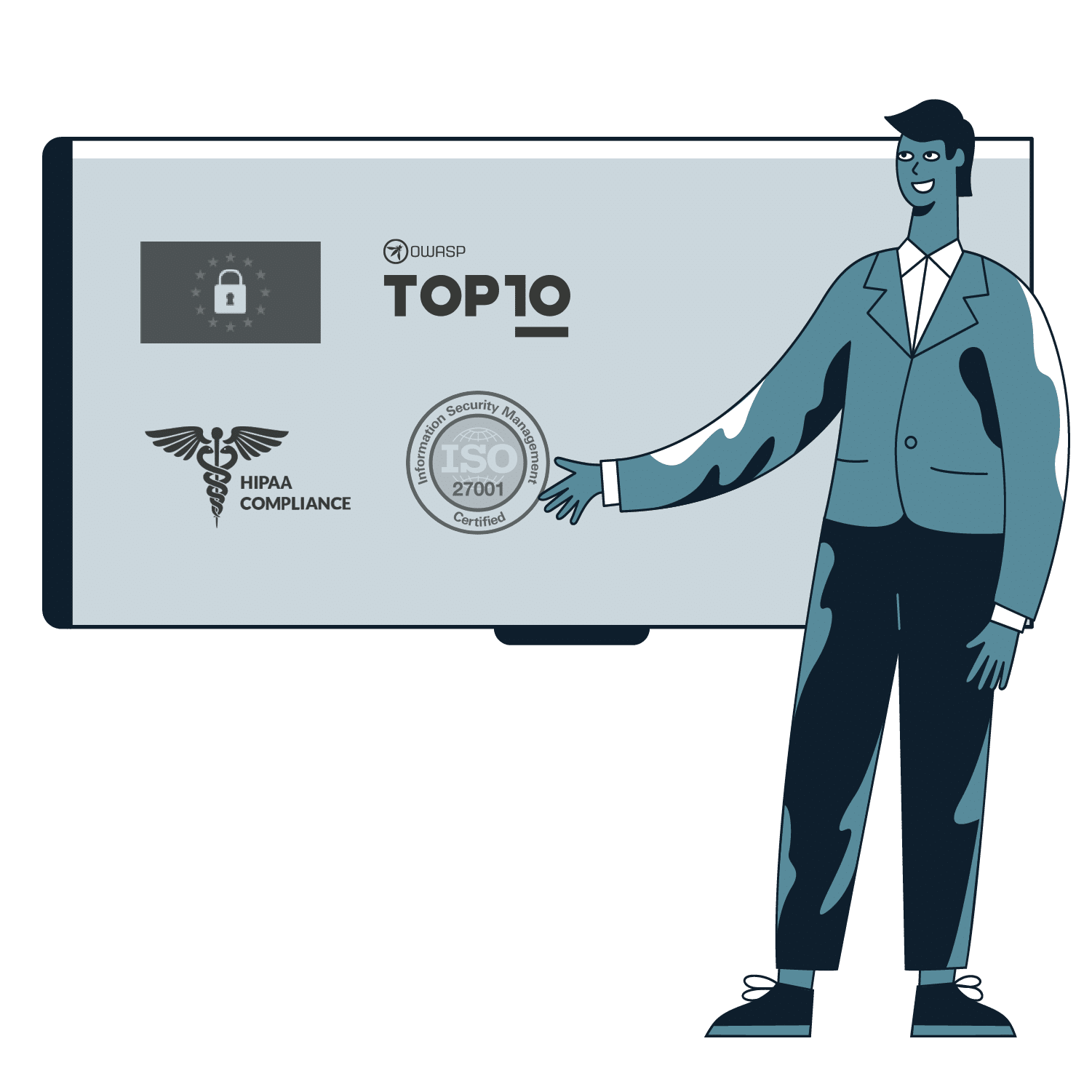

Enterprise-grade security & compliance

- Secure infrastructure: ISO 27001 certified delivery centers in Canada, Egypt, India, and Romania (five certified sites in total).
- Data privacy by design: GDPR and HIPAA compliance, PII redaction, secure file handling, and VPN/VPC options.
- NDAs and legal coverage: We support your preferred legal framework or provide standard NDAs.


Real-world use cases for training data for Generative AI

Real-world generative AI projects we support – across industries and functions.

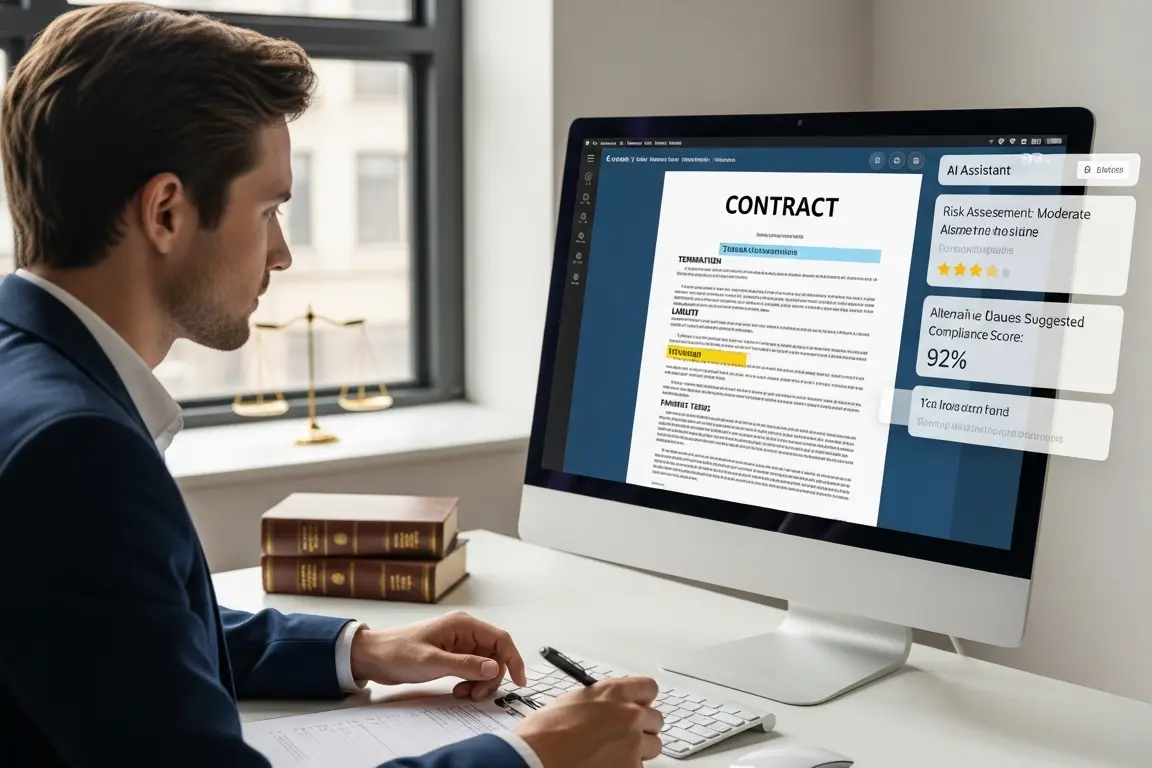

Legal GenAI Assistants

Train LLMs to handle contracts, compliance, and case summaries with confidence.

→ Domain-specific text creation, document chunking, RLHF, ethical evaluation

Medical Knowledge Models

Support diagnosis, patient guidance, or drug discovery via safe and accurate GenAI.

→ Expert-generated text, bias review, red teaming, multilingual QA

Retail & eCommerce Personalization

Generate product descriptions, marketing content, and conversational shopping agents.

→ Segment-specific prompt tuning, tone/style evaluation, UX feedback scoring

Enterprise Document Chatbots

Build secure GenAI solutions for finance, HR, legal and knowledge bases.

→ Semantic chunking, retrieval optimization, hallucination detection

Text-to-Image Model Tuning

Improve visual outputs for brand alignment, accessibility, or culture fit.

→ Image collection, caption correction, output scoring across markets

Voice AI / Emotional Speech

Train emotionally nuanced, multilingual voice assistants or IVR systems.

→ Speech data, emotion labeling, prosody analysis, TTS evaluation


FAQs on our training data for Generative AI services

Pricing depends on multiple factors including data modality, project complexity, quality requirements, language coverage, and delivery timelines. We’ll provide a detailed quote after a short scoping call to understand your needs.

We support mutual NDAs and can align with your enterprise data-handling requirements. Our team also works within secure VPC/VPN environments where needed.

We use a multi-layer QA process including gold tasks, expert calibration, reviewer validation and accuracy tracking. Each project includes pilot testing and real-time quality monitoring.

We support LLMs, SLMs, VLMs, RAG architectures, and personalization models across all modalities. Our services are tailored to your model type, domain and region.

We typically launch pilots within 1–2 weeks of scope approval. Production timelines depend on volume, QA depth, and languages but are designed for rapid scale-up.

We provide red teaming, adversarial prompting, and ethical AI assessments, using diverse human reviewers to surface bias, hallucinations, and content risks.

Ready to Elevate Your GenAI Performance?

Let’s scope your project and get you the training data for generative AI you need – human-validated, secure, and tailored to your model architecture.