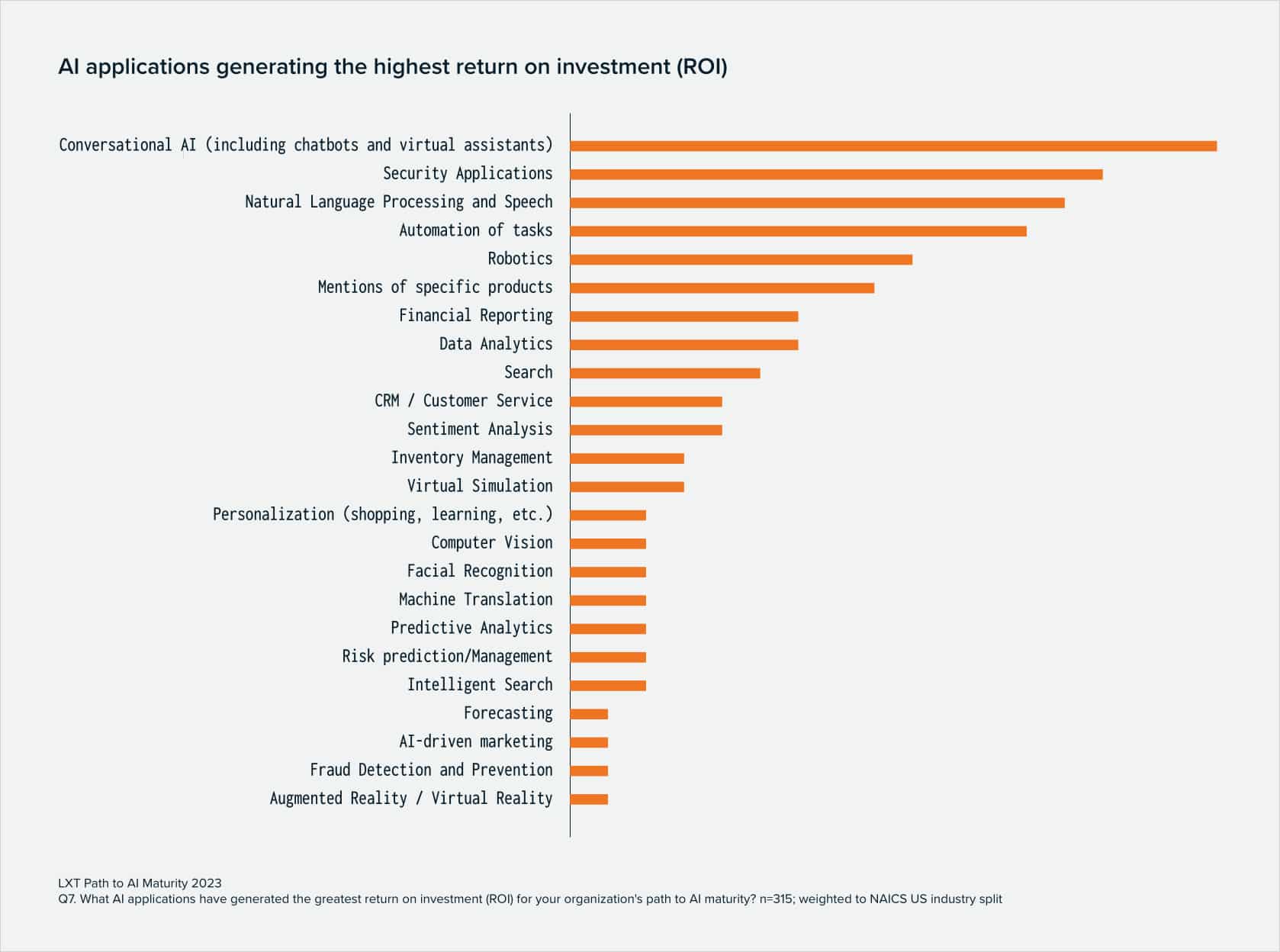

Welcome back to AI in the Real World. As we gear up to kick off the next round of our Path to AI Maturity research, I was reflecting on a finding from the study we conducted late last year, in which we asked respondents to identify which AI solutions generated the highest return for their company. Taking the top three spots were Conversational AI (including chatbots and virtual assistants), security applications, and natural language processing/speech applications.

The answers weren’t all that surprising given how many businesses use these tools, but it got me wondering how, and to what extent, generative AI has impacted these solutions, now that it has burst onto the scene.

Generative AI is most often associated with using AI to create content, so it might be easy to think that it couldn’t directly improve tools designed to defend against cyber attacks or to facilitate communication. But what I found proved otherwise: the same fundamental advances that make tools like ChatGPT and Bard the game changers they are can also be applied to more specialized applications of AI.

Improving the conversational performance of chatbots and virtual assistants

Early chatbots were rules-based and locked into the conversational flows they were scripted to follow. Recent advances in conversational AI and natural language processing have greatly improved chatbots’ ability to sound human, but any question or statement that fell outside a chatbot’s predefined rules would still lead to a dead end. Anyone who has spent much time working in this space has heard stories of customer experiences that went sideways because of a broken chatbot conversation.

Now generative AI is enabling chatbots to be more flexible and to respond to customer queries in a more nuanced way. Instead of being at a loss for words when a query strays outside their training, chatbots powered by generative AI have the potential to draw on technical content from an organizational database or from archives of resolved service calls.

An early example of this is Duolingo, an organization that prides itself on creating fun and effective learning experiences. Duolingo augmented its latest release, Duolingo Max, with GPT-4, which powers two new chatbot features: Explain My Answer and Roleplay.

Explain My Answer offers students a lesson on proper word choice, and students can engage a chatbot within the context of the lesson to ask whatever follow-up questions come to mind. This can be very helpful whether students repeatedly make the same mistake or simply need further elaboration to clarify the nuances between different words.

Roleplay gives language learners real-world scenarios in which they carry on conversations with chatbots and earn experience points. Thanks to features like Roleplay, Duolingo Max provides an interactive, responsive experience built on lessons and scenarios created by experts in curriculum design.

Envisioning more secure mobile platforms

There’s no denying the allure of generative AI. Getting an informed, near-instantaneous answer that’s based on all of the information available online about a particular topic can be incredibly empowering. But submitting your personal or business information to a cloud service is inadvisable.

For their part, security professionals have wisely cautioned against submitting ANYTHING about themselves or their business to generative AI tools, and have advised adopting a zero-trust security stance instead.

Qualcomm is taking a more visionary approach by inviting readers to imagine a world where an on-device generative AI security application could act as an intermediary between your device and the online services you use throughout the day. Security apps like these would protect your personal information while letting online services access it securely when you consult a financial adviser or make a dinner reservation online, for example.

It’s anyone’s guess how much work needs to be done before such a vision could become reality, but Qualcomm’s idea for the future is certainly more compelling than the status quo. Meanwhile, cybersecurity research firms such as Tenable Research are experimenting with large language models to streamline their work in reverse engineering, debugging code, improving web app security, and increasing visibility into cloud-based tools.

Perhaps the two companies can work together and meet somewhere in the middle?

Creating better user experiences through generated and cloned speech

Smart assistants first came on the scene in the mid-1990s, but, as is often the case, the early technology was rudimentary. Since then, large language models and advances in natural language processing and deep learning have led to significant breakthroughs in computers’ capacity to understand and generate text. We’ve all seen the astonishing ability of generative AI to summarize existing content, as well as to write original works.

The same technologies have made it much easier to create and clone human speech, a process that once required a great deal of training and still produced results that weren’t terribly convincing.

In just the last few months, Meta has unveiled generative AI systems with text-to-speech capabilities that can edit an audio clip or generate a voice in a wide variety of styles. Meta AI’s Voicebox models can also take a portion of text in one language and render it as speech in another. Google’s AudioLM offers many of the same capabilities.

Generative adversarial networks enable you to clone a voice and iterate on it until you have a version that’s virtually indistinguishable from the individual’s actual voice. And products such as ElevenLabs and Podcastle offer text-to-speech tools that can create lifelike voices or clone your own voice after you read as few as 70 sentences aloud.

Cloning a voice brings many benefits and threats, as the global consulting firm Ankura recently spelled out. For example, people who can no longer speak for medical reasons could see a dramatic improvement in their quality of life if their voices could be recreated. Companies can reduce operating costs and streamline call center operations by deploying virtual assistants. And Hollywood can bring back characters voiced by actors who have since passed away.

With advances like these, we may have finally reached the point where voice interaction seems possible AND preferable. Not only is voice interaction faster than typing, it’s also safer, can make online searches more effective, and could give rise to new use cases for technology.

On the flip side, there are many threats, virtually all of which fall under the heading of security attacks targeting individuals and businesses. In anticipation of this, Google is working on a classifier that can detect audio snippets generated by its own AudioLM speech model.

This is a lot to take in, and breakthroughs in generative AI seem to be coming at a faster pace than anything I’ve seen in my lifetime. It might be a while before advances like these reach your bottom line. Either way, stay buckled in.